This post will walk you through a particular approach for efficiently importance sampling huge numbers of lights in a scene. I call the approach "Light Trees".

This post has been a long time coming. I actually wrote about this technique (tersely) back in 2014 on ompf2.com. But I never got around to writing about it properly on this blog. So I'm fixing that now!

Before I get started, I want to make it clear that I am not the first person to come up with this idea. In particular, the Manuka guys have apparently been doing something like this since the inception of their renderer. And there are several other people that apparently came up with pretty much the same technique around the same time as me.

Really, the core approach is super straightforward. And the fact that I (of all people) independently came up with it is a strong indication that it's pretty obvious. After all, I'm an animator professionally, not a software developer.

So my goal in this post is not to claim any credit for the idea. Quite the contrary—given how many people have independently come up with it, I don't think anyone can reasonably claim credit for it.

Rather, the goal of this post is to clarify and distill the approach, since the papers I've seen about it describe more detailed or complex variants. I discuss those papers and their contributions at the end of this post.

The Many Lights Problem

The problem we're trying to solve with light trees is sometimes called the "many lights" problem. If you're already familiar with this problem and with importance sampling, then please feel free to skip to the "Light Trees" section. These first two sections are just filling in that background.

In a typical forward path tracer, when you want to calculate lighting at a point on a surface, you generally do two things1:

- Sample the light(s) in the scene to calculate direct lighting.

- Shoot one or more rays out in random directions to calculate indirect lighting.

The many lights problem shows up in that first step.

Calculating direct lighting is really easy if you only have one light: just shoot a shadow ray at the light, and if the ray reaches it, calculate the lighting. But what if there's more than one light? In that case, there are two broad approaches:

- Sample all lights.

- Pick one light at random.

The first approach—sampling all lights—works really well:

The second approach—sampling one light at random—also works well enough, but results in some noise due to the random selection:

Both approaches are fine. The former takes longer to render because it needs to do more calculations per shading point, but produces less noise. The latter renders faster but produces more noise, which takes more samples to get rid of. Which approach is better depends on the particular scene.

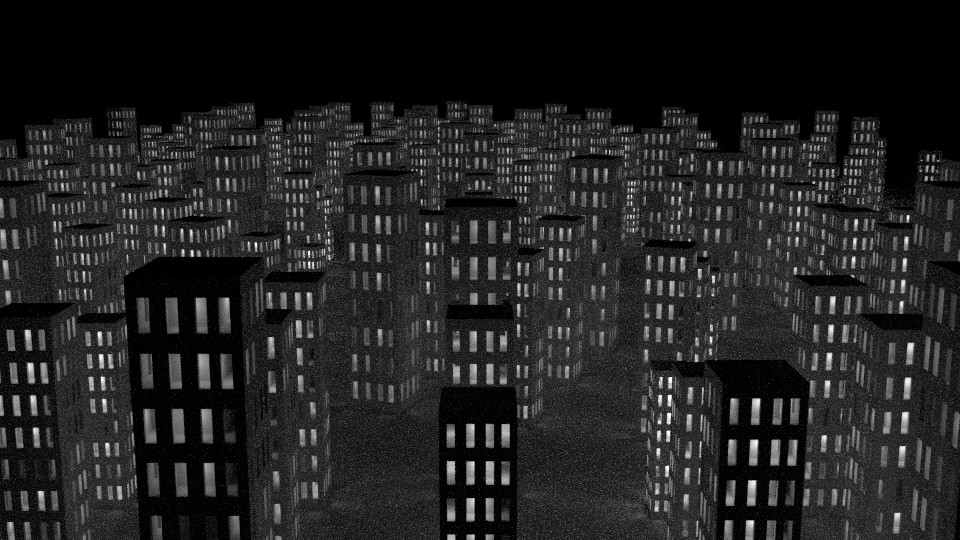

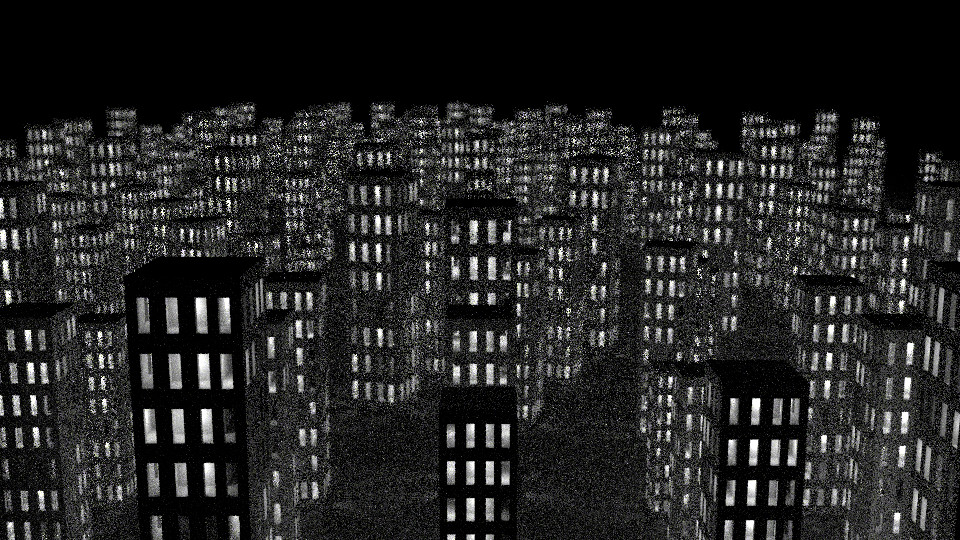

The many lights problem starts to show up when there are... eh... many lights. A large number of lights. For example, I have a test scene that contains roughly 8000 lights. Rendering this scene by sampling all lights looks like this2:

It doesn't look too bad. But it took 3 hours to render at only 16 samples per pixel, which is about 11 minutes per sample. That's because we have to spawn 8000 shadow rays on every ray hit, and potentially do as many lighting calculations. Imagine the render times on an actual production scene with even more lights, and at the sample rates needed to converge to a sufficiently noise-free image.

You might think that randomly selecting lights will save us. And, indeed, that brings our render time down to 6 seconds. But the result is so noisy that it's unusable:

That's because the probability of selecting a light that matters to the shading point is so low. Even if we crank our samples into the thousands, the render would still be somewhat noisy.

So it seems like we're stuck, then. Either our render times are intractable, or our renders are unusably noisy. And that, in a nut shell, is the many lights problem.

Importance Sampling

The answer to this problem is something called importance sampling3.

With approach #2 (random selection) we have a lot of wiggle room in how we select our lights, and the idea behind importance sampling is to use that wiggle room to sample important things with higher probability.

As an example, let's make a scene with two lights, with one of the lights much brighter than the other. If we render it with equal probability of selecting either light, it looks like this:

But if we use importance sampling to select the brighter light with higher probability, it looks like this:

So is that it, then? We just select the brighter lights more often, and we're good to go?

Unfortunately, no. You can get a hint of why that won't work by noticing that the area under the dimmer light actually gets more noisy in the importance sampled image.

The problem is that even though one light is brighter than the other, that doesn't mean it actually contributes more light to the point we're shading. For shading points that are sufficiently close to the dim light, the dim light is effectively the brighter one.

For a simple scene like the one above, that's not a big problem. But for something like the city scape scene it's a huge problem. First of all, all the lights in that scene are about the same brightness, so even with brightness-based importance sampling we would just be selecting them equally anyway. But also, even if some of the lights were brighter, what are the chances that any given light has much effect on any given shading point? Very low.

So what we actually want is to somehow sample the lights in proportion to the brightness they contribute to the current shading point. If we do that, things look way better:

But... how do we do that?

Light Trees

(To summarize for those that skipped to this section: our goal is to select a light from all lights in the scene according to their contributions to the current shading point.)

The naive way to do this would be to calculate the shading from all the lights, and then select a light based on those results. But that doesn't get us anywhere because then we're just sampling all the lights anyway.

So instead we need some efficient way to approximate that. And that's exactly what light trees let us do.

A light tree is a pretty straightforward data structure: it's just a BVH of the lights in the scene, where each node in the BVH acts as an approximation of the lights under it. For it to be an effective approximation, that means we also need to store some extra data at each node.

The simplest kind of light tree would simply store the total energy of all the lights under each node. You could then treat each node as a light with the same size as the spatial bounds of the node, and emitting the same energy as the lights under it.

I'm going to use that simple light tree variant to illustrate the technique. But I'll discuss some possible extensions in a bit.

Okay, so we have our light tree as described above, and we have our shading point on a surface. How do we select a light?

Starting from the root of the tree, we:

- Calculate the lighting (or an approximation thereof) from each of the child nodes.

- Take those lighting results and select which child to traverse into based on them. For example, if the left node contributes twice as much light, then we'll be twice as likely to traverse into it.

- If the selected child is a leaf, return the actual light under it. Otherwise, go back to step 1 with the selected child.

So that's the basic idea. But there are a couple of further details:

- We also need to return the probability of selecting that light. This is because the calling code needs to weight that light's contribution based on that probability.

- We need a good "approximate lighting" function to use in step 1. Incidentally, I like to call this the "weighting" function, because we only use it to determine the weighted probability of traversing into each node.

Calculating the light-selection probability is pretty easy: we just combine the probabilities of all the child nodes we traverse into, which is just multiplying them all together.

Coming up with a good weighting function is trickier. The ideal weighting function would exactly calculate the lighting from all the individual lights under the node. But if we could do that efficiently then we wouldn't need the light tree in the first place! So instead we need something that approximates that well. I'm going to punt on this for now, but we'll come back to it later. For the moment, just assume we have a reasonable weighting function.

Given all of those things, our light selection process is the following pseudo code, where tree_root is the root node of the light tree, shading_point is the data of the point we're shading, and n is a random number in the range [0, 1):

def light_tree_select(tree_root, shading_point, n):

node = tree_root # Updated as we traverse.

selection_p = 1.0 # Selection probability, also updated as we go.

loop:

if is_leaf(node):

return (node.light, selection_p)

# Child node weights, or "approximate lighting".

child_1_w = weight(node.child_1, shading_point)

child_2_w = weight(node.child_2, shading_point)

# Normalized probabilities of traversing into either child.

child_1_p = child_1_w / (child_1_w + child_2_w)

child_2_p = 1.0 - child_1_p

# Select the child for the next loop.

if n < child_1_p:

node = node.child_1

selection_p *= child_1_p # Accumulate probability.

n /= child_1_p # Scale random number.

else:

node = node.child_2

selection_p *= child_2_p # Accumulate probability.

n = (n - child_1_p) / child_2_p # Scale random number.

And that's it! Well, modulo some floating point corner cases. But that's the entire thing. Really.

One thing I didn't mention yet: you need to scale the random number on each traversal step. That's what lets us get away with using just one number, instead of needing a new random number every time we choose a child. I'm feeling too lazy to explain how it works in detail, but you can think of it as the tree whittling down the space of the random number as it traverses deeper and deeper.

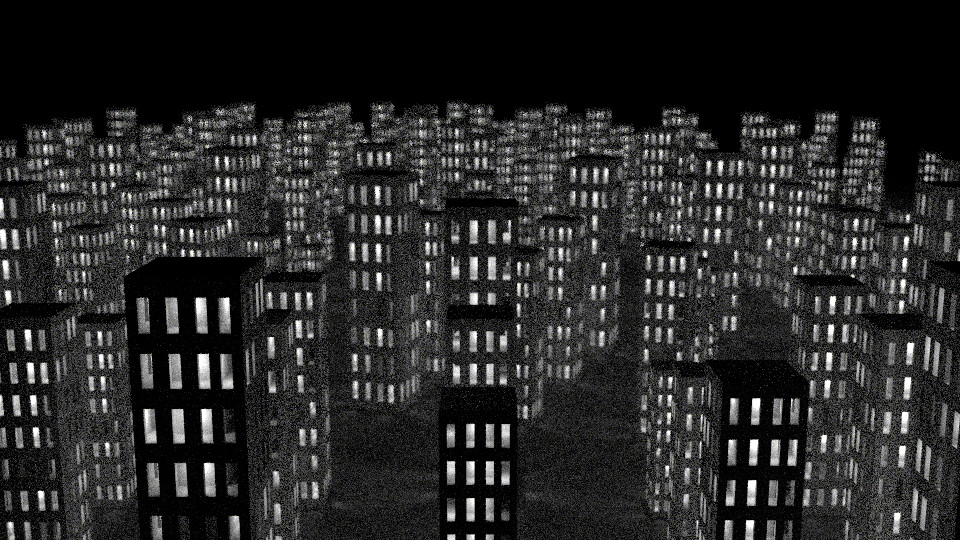

Anyway, if we implement that pseudo code, we get this beautiful result at 16 samples per pixel:

This particular render uses a pretty simple weighting function: it assumes the shading point is lambert, treats the node as a spherical light, and just uses the analytic formula for lambert shading from a spherical light4.

That isn't an especially good weighting function for anything other than diffuse surfaces. But it works surprisingly well in many cases. It's definitely not sufficient for a robust implementation, however.

Potential Extensions and Improvements

Starting from that base, there are a bunch of potential improvements we could explore. For example:

- A better weighting function that handles, among other things, a wider variety of materials well. This is more-or-less necessary for a robust implementation.

- Storing more information than just total energy in the nodes, and using that for better weighting. For example, storing the approximate directionality of lights in cases such as spot lights and some area lights.

- Alternative tree constructions. For example, using something other than the surface area heuristic to cluster lights, or using higher-arity trees.

- Accounting for shadowing (somehow...).

- etc.

The core idea—using a hierarchy to approximate light importance—is always the same. But the potential for variety within that is huge.

As a quick example, this render:

...is also 16 samples per pixel, but (if you flip between it and the last render) it's clearly far less noisy. This was achieved by playing with some of the things above.

Further Reading

There are two published papers I'm aware of that describe variations on this light tree approach, both of which are very much worth reading:

- Importance Sampling of Many Lights With Adaptive Tree Splitting by Conty and Kulla (2017)

- Stochastic Lightcuts by Cem Yuksel (2019)

Importance Sampling of Many Lights With Adaptive Tree Splitting is, in my opinion, the more significant paper of the two. It covers a large breadth of things including:

- A novel tree construction approach that goes beyond the SAH, accounting for factors unique to light trees.

- A way to account for the directionality of lights in the tree and to use that information to improve weighting calculations.

- A novel "tree splitting" approach for returning multiple lights with reduced variance.

The main downside is that their weighting functions don't work well without their tree splitting approach, and can approach very high variance without it. (In fact, the Stochastic Lightcuts paper makes comparisons to it without the tree splitting, which highlights how poorly it performs in that case.)

Stochastic Lightcuts proposes the same technique as both this post and my 2014 post on ompf2, but frames it as an extension to lightcuts. I personally find that framing confusing, and it actually took me a couple readings to realize that it is, in fact, the same technique. But some people might find that framing easier to understand, especially if they're already familiar with lightcuts.

However, I believe the significant contribution of Stochastic Lightcuts is the weighting function they propose, which is based on the lightcuts error bounding function, and accounts for different surface materials and lights with directional components. Moreover, unlike the weighting function from the Adaptive Tree Splitting paper, it works really well with the standard traversal approach—no tree splitting required. I would definitely recommend this paper as the baseline for any real implementation of light trees, and then explore improvements from there.

Footnotes

-

This is a simplification, of course. But it's sufficient for illustrating the problem.

-

If you compare to later renders in the post, you'll notice that it doesn't quite match. That's because this one was rendered in Cycles, but the others are rendered in Psychopath. Psychopath doesn't support sampling all lights, and I didn't feel like implementing a whole feature just for illustration purposes.

-

For a more thorough introduction to importance sampling, I strongly recommend the amazing PBRT book, which is available for free online. (But is also very much worth buying!)

-

See the paper "Area Light Sources for Real-Time Graphics" by John M. Snyder for the appropriate formula.