Interpolating object transforms is a critical task for supporting transform motion blur in a 3D renderer. Psychopath takes a rather brain-dead approach to this and just directly interpolates each component of the transform matrices. This is widely considered to be wrong. The recommended approach is to first decompose the matrices into separate translation/rotation/scale components before interpolating.

In this post I'm going to argue that, for motion blur, the brain-dead approach is not only perfectly reasonable, but actually has some important advantages.

Deformation motion blur.

Before discussing transform motion blur, let's take a brief detour into the world of deformation motion blur.

Deformation motion blur is used when a model is morphing or deforming in some way. A common example of this is animated characters such as animals or people. There is no simple transform that can describe what is happening to the mesh of a character when it's moving. In theory, the renderer could implement the entire rig used to animate the character, but that's not practical, particularly if you're trying to support multiple authoring applications (Maya, Blender, Houdini, Cinema 4D, etc.).

So instead, deformation motion blur is typically done very simply: the authoring application provides multiple snapshots of the mesh at different times, and the renderer linearly interpolates (or "lerps") the positions of each vertex between those snapshots.
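A minimal Python sketch of that per-vertex lerp, assuming the snapshots are evenly spaced across the shutter interval and stored as plain lists of `(x, y, z)` tuples with matching topology (the function name and data layout are hypothetical, not Psychopath's actual code):

```python
def lerp(a, b, t):
    """Linear interpolation between two scalars."""
    return a + (b - a) * t


def eval_deformed_mesh(snapshots, t):
    """Vertex positions of a deforming mesh at normalized shutter time t in [0, 1].

    `snapshots` is a list of meshes, each a list of (x, y, z) vertex tuples with
    matching topology, assumed to be evenly spaced across the shutter interval.
    """
    if len(snapshots) == 1:
        return list(snapshots[0])
    segments = len(snapshots) - 1
    # Pick the pair of snapshots that brackets t, and t's position within it.
    seg = min(int(t * segments), segments - 1)
    local_t = t * segments - seg
    before, after = snapshots[seg], snapshots[seg + 1]
    # Every vertex moves independently along a straight line.
    return [tuple(lerp(a, b, local_t) for a, b in zip(va, vb))
            for va, vb in zip(before, after)]


# A one-vertex "mesh" captured at three times: it moves right, then up.
snapshots = [[(0.0, 0.0, 0.0)], [(2.0, 0.0, 0.0)], [(2.0, 2.0, 0.0)]]
print(eval_deformed_mesh(snapshots, 0.25))  # [(1.0, 0.0, 0.0)]
print(eval_deformed_mesh(snapshots, 0.75))  # [(2.0, 1.0, 0.0)]
```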

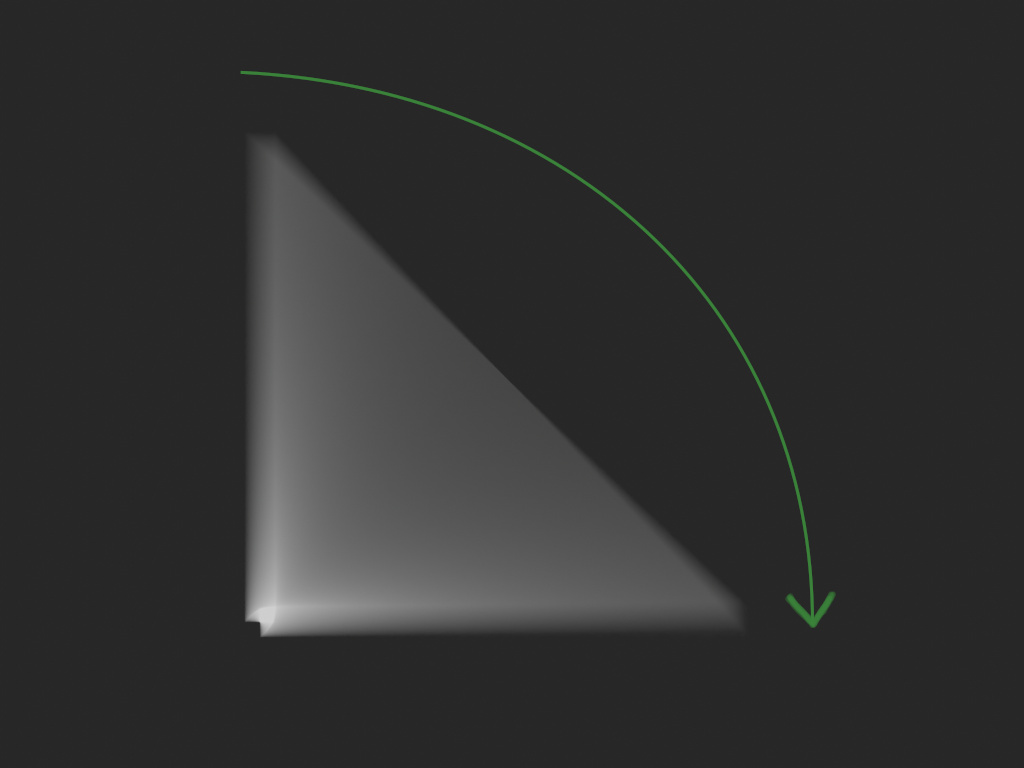

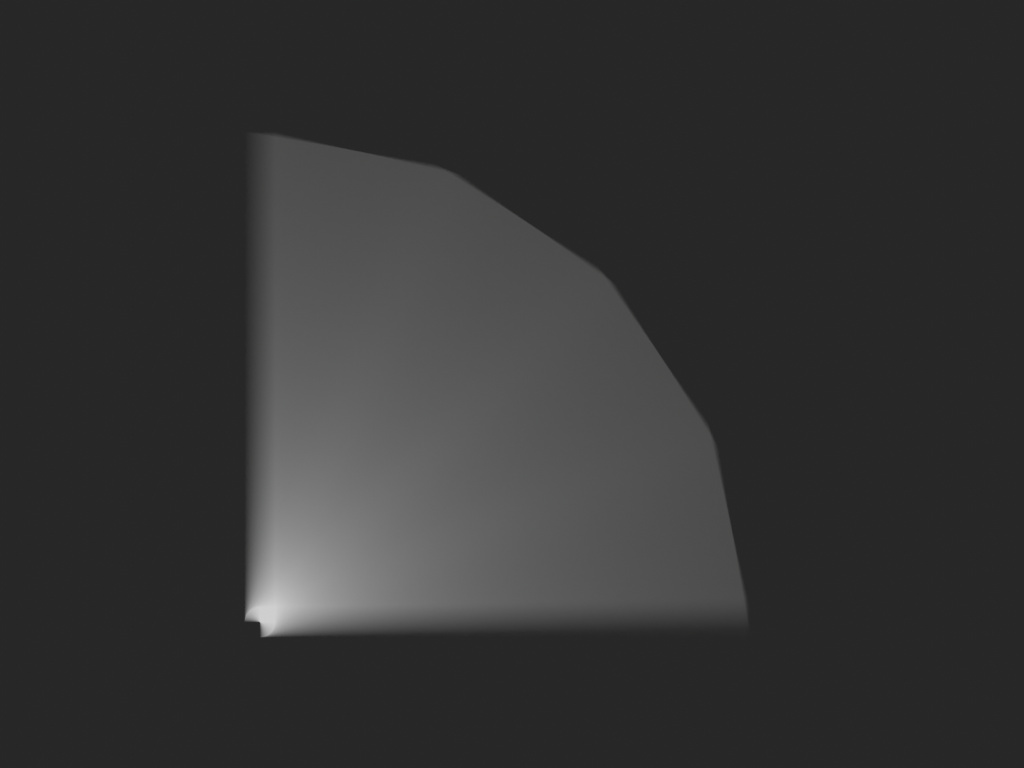

Even though this approach is intended for things like deforming characters, it can also be used for simple transform-like motions. For example, a 90 degree rotation using deformation motion blur with two snapshots looks like this:

Instead of a circular arc like you would expect, there are just straight lines. This is because each point in the model is directly lerped between the snapshots. To fix this, you can feed the renderer more snapshots to get finer and finer linear segments. For example, here's the same rotation with five snapshots:
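To put a number on those straight lines: with evenly spaced snapshots, each linear segment is a chord of the true circular arc, and a point passes closest to the rotation axis at the chord's midpoint, at a distance of radius × cos(θ/2) for a segment spanning angle θ. A small sketch for the 90 degree example (hypothetical function name, unit radius assumed):

```python
import math


def chord_midpoint_radius(total_angle_deg, num_snapshots, radius=1.0):
    """Closest approach to the rotation axis of a point lerped between snapshots.

    The point sits `radius` away from the axis and rotates by `total_angle_deg`
    over `num_snapshots` evenly spaced snapshots. Each linear segment is a
    chord spanning total_angle / (num_snapshots - 1), and the chord passes
    closest to the axis at its midpoint.
    """
    segment_angle = math.radians(total_angle_deg) / (num_snapshots - 1)
    return radius * math.cos(segment_angle / 2)


for n in (2, 3, 5, 9):
    print(n, round(chord_midpoint_radius(90, n), 4))
# 2 -> 0.7071, 3 -> 0.9239, 5 -> 0.9808, 9 -> 0.9952
```

Going from two snapshots to five takes the worst-case radius from about 71% to about 98% of the true value.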

Regardless of whether this approach is used for complex deformations or simple transforms, these results are never "correct". They don't replicate the exact motion as it was in the authoring tool. But thankfully, that strict level of correctness isn't necessary.

With motion blur, we're trying to get a photographic effect, not animate things across multiple frames. And for that purpose, approximations are good enough. In practice, the vast majority of motions only need a handful of linear segments to be approximated with good accuracy. And in the rare cases where more snapshots are needed, we can crank them up as much as we need.

Approaching motion blur this way is essentially the motion equivalent of modeling arbitrary surfaces with lots of triangles. You can approximate any surface with triangles, and you can approximate any motion path with line segments. And when you need more resolution, you just add more of them.

Transform motion blur: two approaches.

Now let's come back to transform motion blur. There are two basic approaches to interpolating object transforms in an offline renderer:

- Direct matrix interpolation. This is where you just interpolate each component of the transform matrix individually.[1] (This is the brain-dead approach.)

- Decomposed transform interpolation. This is where you first decompose the matrix into separate translation, rotation, and scale[2] components, and then you interpolate those.

Decomposed transform interpolation as it's usually implemented today was first proposed in the paper Matrix Animation and Polar Decomposition by Ken Shoemake and Tom Duff. It's a great paper, and worth a read on its own. It also covers the benefits of decomposed interpolation over direct matrix interpolation.

But if you don't want to read the paper, the main benefit is this: decomposed interpolation preserves the scale of rotating objects, whereas direct matrix interpolation doesn't. This is also the main reason people recommend against direct matrix interpolation for motion blur, as exemplified by, e.g., the PBRT book.[3]
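A quick illustration of the scale issue, as a Python sketch: it lerps the matrices for a 90 degree rotation about Z and measures how much the halfway matrix scales each local axis. The "decomposed" side here is a deliberate simplification (interpolating the angle directly works only because the example is a pure rotation about a known axis); a real implementation would decompose the matrix and typically slerp a quaternion.

```python
import math


def rotation_z(angle):
    """3x3 rotation matrix about the Z axis (angle in radians)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def lerp_matrix(m0, m1, t):
    """Direct matrix interpolation: lerp every component independently."""
    return [[a + (b - a) * t for a, b in zip(r0, r1)] for r0, r1 in zip(m0, m1)]


def axis_scale(m, col):
    """Length of a column, i.e. how much the matrix scales that local axis."""
    return math.sqrt(sum(m[row][col] ** 2 for row in range(3)))


start, end = rotation_z(0.0), rotation_z(math.pi / 2)

# Direct: the halfway matrix shrinks the local X and Y axes to about 0.7071,
# while the Z axis (the rotation axis) keeps its length of 1.
direct_half = lerp_matrix(start, end, 0.5)
print(axis_scale(direct_half, 0), axis_scale(direct_half, 2))

# Simplified stand-in for decomposed interpolation: since this example is a
# pure rotation about a known axis, interpolating the angle is enough.
decomposed_half = rotation_z(0.5 * (math.pi / 2))
print(axis_scale(decomposed_half, 0), axis_scale(decomposed_half, 2))
```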

At first glance, that's really convincing. Of course you don't want your objects to scale erroneously while rotating! But not so fast. Let's take a look at what these two approaches actually look like, using a simple example.

A spinning planet.

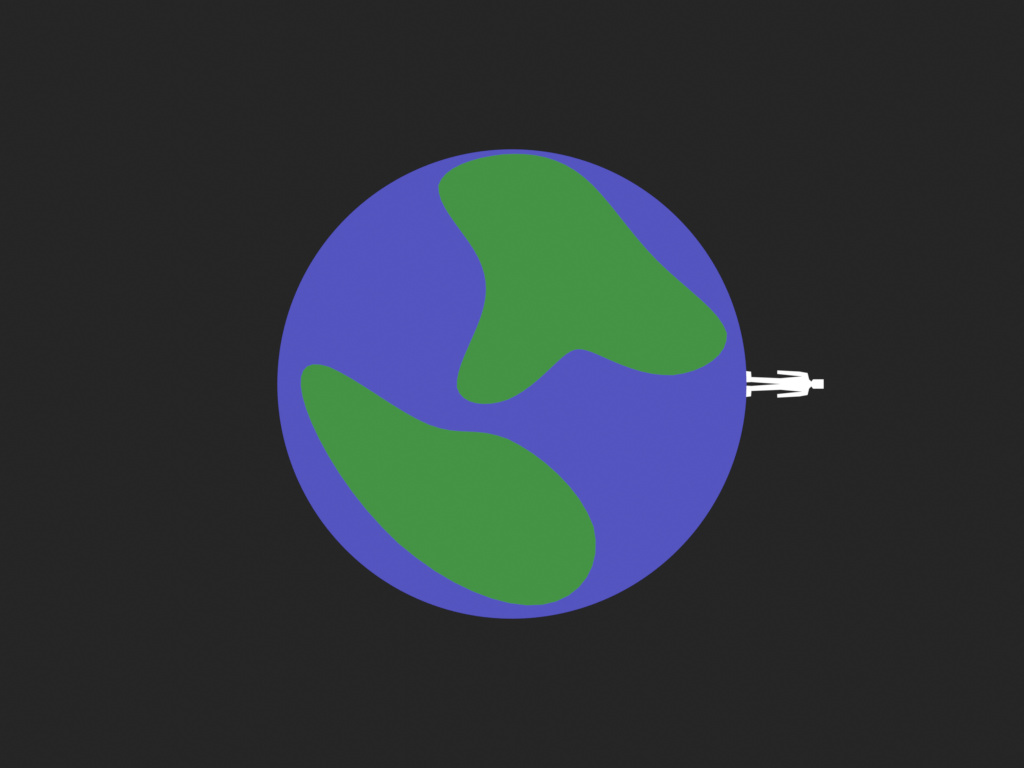

The example is a rapidly spinning planet with a character on it. The character is supposed to remain on the planet's surface, as if their feet were glued down. Here are the start and end positions that we will be interpolating:

(This is admittedly a contrived example, but it's meant to be illustrative, not representative.)

If we use the brain-dead direct matrix interpolation approach, then the half-way point looks like this:

This demonstrates exactly the issue that people warn against: both the planet and the character are scaled down when they shouldn't be. This is clearly a wrong result!

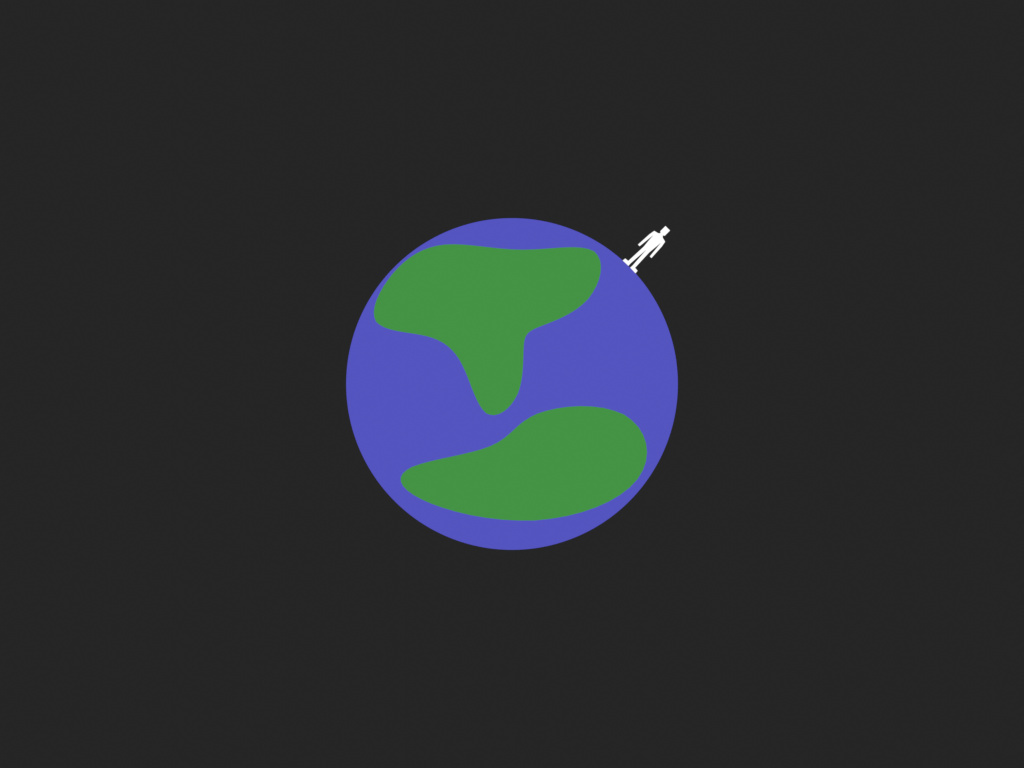

As mentioned above, the typical fix is to use decomposed transform interpolation instead. The half-way point using that approach looks like this:

So it works. Sort of. The planet now maintains its scale. But the character has seemingly vanished. An x-ray view reveals what happened:

The character has sunk into the surface of the planet. This is also clearly a wrong result!

This example highlights that there's actually a trade-off between these two approaches:

- Direct matrix interpolation preserves relative positions, but sacrifices scale.

- Decomposed interpolation preserves scale, but sacrifices relative positions.
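The planet example can be reproduced numerically in 2D. In this Python sketch (all names hypothetical), every snapshot transform is a rigid `(angle, tx, ty)` triple; the character's transform is the planet's rotation followed by an offset to the surface point `(0, RADIUS)`, baked into one transform per snapshot as an authoring tool would export it. For rigid 2D transforms, decomposed interpolation reduces to lerping the angle and translation, which is what `decomposed` does:

```python
import math

RADIUS = 1.0
QUARTER_TURN = math.pi / 2


def lerp(a, b, t):
    return a + (b - a) * t


def to_matrix(key):
    """(angle, tx, ty) -> 2x3 affine matrix [[a, b, tx], [c, d, ty]]."""
    angle, tx, ty = key
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, tx], [s, c, ty]]


def apply(m, p):
    """Transform the 2D point p by the 2x3 affine matrix m."""
    return (m[0][0] * p[0] + m[0][1] * p[1] + m[0][2],
            m[1][0] * p[0] + m[1][1] * p[1] + m[1][2])


def direct(k0, k1, t):
    """Direct matrix interpolation: lerp each matrix component."""
    m0, m1 = to_matrix(k0), to_matrix(k1)
    return [[lerp(a, b, t) for a, b in zip(r0, r1)] for r0, r1 in zip(m0, m1)]


def decomposed(k0, k1, t):
    """Decomposed interpolation for rigid 2D transforms: lerp angle and translation."""
    return to_matrix(tuple(lerp(a, b, t) for a, b in zip(k0, k1)))


def glued_character(angle):
    """Character transform: planet rotation, then offset to the surface point (0, RADIUS)."""
    return (angle, -RADIUS * math.sin(angle), RADIUS * math.cos(angle))


planet_keys = [(0.0, 0.0, 0.0), (QUARTER_TURN, 0.0, 0.0)]
character_keys = [glued_character(0.0), glued_character(QUARTER_TURN)]


def halfway(method):
    """World positions of the ground under the feet, and of the feet, at t = 0.5."""
    ground = apply(method(*planet_keys, 0.5), (0.0, RADIUS))
    feet = apply(method(*character_keys, 0.5), (0.0, 0.0))
    return ground, feet


for method in (direct, decomposed):
    ground, feet = halfway(method)
    print(f"{method.__name__:>10}: ground radius {math.hypot(*ground):.4f}, "
          f"feet radius {math.hypot(*feet):.4f}")
```

If the sketch is right, direct interpolation reports the ground and the feet at the same radius of about 0.7071 (shrunk, but still touching), while decomposed interpolation keeps the ground at radius 1.0 and leaves the feet at about 0.7071, below the surface.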

The reason for this becomes more obvious if we look at the full motion-blurred results. Here's direct matrix interpolation:

And here's decomposed interpolation:

With direct matrix interpolation, all points follow simple linear paths,[4] just like deformation motion blur. And that's why the character's feet stay properly planted: if any two points share a position in every snapshot, then they will interpolate together as well, regardless of what transforms put them there. Those linear motion paths, however, are also the reason for the erroneous scaling.
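The straight-line behavior is just linearity: transforming a point by the lerped matrix gives the same result as transforming it by each snapshot matrix and lerping the two positions. A numerical spot check in Python, using random affine matrices (shear and non-uniform scale included) so that nothing depends on the transforms being rigid:

```python
import math
import random


def lerp(a, b, t):
    return a + (b - a) * t


def lerp_matrix(m0, m1, t):
    """Direct matrix interpolation: lerp every component independently."""
    return [[lerp(a, b, t) for a, b in zip(r0, r1)] for r0, r1 in zip(m0, m1)]


def apply(m, p):
    """Apply a 3x4 affine matrix (rows of [linear part | translation]) to a 3D point."""
    return tuple(sum(m[r][c] * p[c] for c in range(3)) + m[r][3] for r in range(3))


def random_affine(rng):
    """An arbitrary affine transform, shear and non-uniform scale included."""
    return [[rng.uniform(-2.0, 2.0) for _ in range(4)] for _ in range(3)]


rng = random.Random(1)
m0, m1 = random_affine(rng), random_affine(rng)
p = (0.3, -1.2, 2.5)  # a point in object space

for t in (0.0, 0.25, 0.5, 0.9, 1.0):
    # Transform by the interpolated matrix...
    via_matrix = apply(lerp_matrix(m0, m1, t), p)
    # ...or lerp the point's world-space snapshot positions: same result.
    via_points = tuple(lerp(a, b, t) for a, b in zip(apply(m0, p), apply(m1, p)))
    assert all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(via_matrix, via_points))
print("direct matrix interpolation moves points along straight lines")
```

Since the interpolated position depends only on the point's world-space snapshot positions, two points that coincide in every snapshot also coincide at every time in between, whatever transforms put them there.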

With decomposed interpolation, on the other hand, the surface of the planet follows a circular motion path, which preserves scale. And the character's rotation around its own origin does as well. But its translation follows a linear path. This mismatch between circular and linear motion paths is what causes the character to erroneously sink below the planet's surface.

It might seem like decomposed interpolation is still better. It's at least preserving the object's scale, which makes it more correct. And as you add more motion segments, the path of the character will converge to remain properly on the planet's surface.

However, you can say exactly the same thing, but reversed, about direct matrix interpolation: it at least preserves relative object positions, which makes it more correct. And as you add more motion segments, the objects will converge to retain their scale.
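Both convergence claims can be checked on the planet example by splitting the quarter turn into more segments. This Python sketch (names hypothetical; a plain rotating planet with a glued-on character, as rigid 2D transforms) measures the worst shrinkage of the planet's surface and the worst gap between the character's feet and the ground over the whole shutter:

```python
import math


def lerp(a, b, t):
    return a + (b - a) * t


def to_matrix(key):
    """(angle, tx, ty) -> 2x3 affine matrix [[a, b, tx], [c, d, ty]]."""
    angle, tx, ty = key
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, tx], [s, c, ty]]


def apply(m, p):
    """Transform the 2D point p by the 2x3 affine matrix m."""
    return (m[0][0] * p[0] + m[0][1] * p[1] + m[0][2],
            m[1][0] * p[0] + m[1][1] * p[1] + m[1][2])


def direct(k0, k1, t):
    """Direct matrix interpolation: lerp each matrix component."""
    m0, m1 = to_matrix(k0), to_matrix(k1)
    return [[lerp(a, b, t) for a, b in zip(r0, r1)] for r0, r1 in zip(m0, m1)]


def decomposed(k0, k1, t):
    """Decomposed interpolation for rigid 2D transforms: lerp angle and translation."""
    return to_matrix(tuple(lerp(a, b, t) for a, b in zip(k0, k1)))


def snapshot(angle, radius):
    """Planet key and glued-character key (angle, tx, ty) at one snapshot."""
    planet = (angle, 0.0, 0.0)
    character = (angle, -radius * math.sin(angle), radius * math.cos(angle))
    return planet, character


def worst_errors(method, total_angle, segments, radius=1.0, samples=64):
    """Worst planet-surface shrinkage and worst feet-to-ground gap over the shutter."""
    shrink = gap = 0.0
    for s in range(segments):
        planet0, char0 = snapshot(total_angle * s / segments, radius)
        planet1, char1 = snapshot(total_angle * (s + 1) / segments, radius)
        for i in range(samples + 1):
            t = i / samples
            ground = apply(method(planet0, planet1, t), (0.0, radius))
            feet = apply(method(char0, char1, t), (0.0, 0.0))
            shrink = max(shrink, radius - math.hypot(*ground))
            gap = max(gap, math.dist(ground, feet))
    return shrink, gap


for segments in (1, 2, 4, 8):
    ds, dg = worst_errors(direct, math.pi / 2, segments)
    qs, qg = worst_errors(decomposed, math.pi / 2, segments)
    print(f"{segments} segment(s): direct shrink {ds:.4f} gap {dg:.4f} | "
          f"decomposed shrink {qs:.4f} gap {qg:.4f}")
```

In this symmetric setup, the direct approach's shrinkage and the decomposed approach's gap should come out essentially equal (both are the sag of a chord below its arc), each should drop roughly fourfold every time the segment count doubles, and the other error of each approach should stay at zero.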

Renderers aren't animation packages.

As an animator and rigger, I don't see much distinction between these two approaches in terms of correctness. From my perspective, both are wrong. And meaningfully so, as demonstrated in the example above.

The root issue is that by the time scene data reaches the renderer, all of the relevant constraints on motion (whether from rigging, carefully hand-crafted animation curves, or simulation) have long since been discarded. There's no way for the renderer to know how the objects are supposed to behave. Maybe they're supposed to shrink! Or maybe the planet and character are part of a complex mechanical system that gives them a sophisticated and very specific motion relationship.

The bottom line is that there's no way for the renderer to know. The only data the renderer has is some transforms at specific snapshots in time. And the only thing it can do with that data is some kind of basic interpolation.

This is a really important distinction between motion blur in offline renderers vs more general transform handling in things like game engines or authoring tools. A game engine knows how its objects are supposed to behave. And an authoring tool lets a person craft motion according to their intentions. But when you're just doing raw interpolation for motion blur, you have essentially zero insight into any of that.

Renderers fundamentally rely on having enough data points to approximate the desired motion with sufficient accuracy. Without enough data points, the result will be wrong no matter which interpolation approach you take.

It's all about tradeoffs.

Direct matrix interpolation treats all motion blur as simple per-point linear interpolation. This comes with the benefit of being simple and predictable, and making it easy to determine how many motion segments are needed for an object's animation. It also has the benefit of behaving identically to deformation motion blur, so from a quality-tuning perspective all motion blur can be treated the same way.
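To illustrate how easy that determination can be: under per-point linear interpolation, a point at distance r from a rotation axis strays at most r × (1 - cos(θ/2)) inside its true arc on a segment spanning angle θ, so the segment count for a given tolerance follows directly. A Python sketch (hypothetical function; the tolerance is in the same units as the radius, and the rotation is assumed to be split into equal segments):

```python
import math


def segments_needed(total_angle, radius, tolerance):
    """Minimum number of equal linear segments for a rotation of `total_angle`
    radians so that a point at `radius` from the axis never strays more than
    `tolerance` inside its true circular path.

    The worst deviation of a chord spanning angle a is radius * (1 - cos(a / 2)),
    reached at the chord's midpoint.
    """
    if tolerance >= radius:
        return 1
    max_segment_angle = 2.0 * math.acos(1.0 - tolerance / radius)
    return max(1, math.ceil(total_angle / max_segment_angle))


# e.g. a point 0.3 units from the axis of a wheel that makes a full turn
# during the shutter, with a maximum allowed deviation of 0.001 units:
print(segments_needed(2 * math.pi, 0.3, 0.001))
```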

It also has the nice property of keeping coincident points coincident, which can be useful for co-moving objects and for preventing erroneous geometry intersection/separation. Moreover, you even get these benefits when using deformation and transform motion blur in combination—e.g. a deforming character wielding/wearing a transforming prop—since they're both interpolating in exactly the same way.

Finally, direct matrix interpolation doesn't care about object origins, which can be an advantage when an object's motion isn't consistent with its origin (e.g. if it's part of a larger rig). Direct matrix interpolation always interpolates with the same straight-line paths regardless of object origin, whereas decomposed interpolation is highly dependent on object origin and can result in bizarre interpolations if an object's origin and intended motion are sufficiently mismatched.[5]
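A small Python sketch of that origin dependence (names hypothetical): the same world-space motion, a 2-unit stick swinging a quarter turn about one end, is authored with two different object origins. For these rigid 2D transforms, decomposed interpolation again reduces to lerping the angle and translation:

```python
import math


def lerp(a, b, t):
    return a + (b - a) * t


def to_matrix(key):
    """(angle, tx, ty) -> 2x3 affine matrix [[a, b, tx], [c, d, ty]]."""
    angle, tx, ty = key
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, tx], [s, c, ty]]


def apply(m, p):
    """Transform the 2D point p by the 2x3 affine matrix m."""
    return (m[0][0] * p[0] + m[0][1] * p[1] + m[0][2],
            m[1][0] * p[0] + m[1][1] * p[1] + m[1][2])


def direct(k0, k1, t):
    """Direct matrix interpolation: lerp each matrix component."""
    m0, m1 = to_matrix(k0), to_matrix(k1)
    return [[lerp(a, b, t) for a, b in zip(r0, r1)] for r0, r1 in zip(m0, m1)]


def decomposed(k0, k1, t):
    """Decomposed interpolation for rigid 2D transforms: lerp angle and translation."""
    return to_matrix(tuple(lerp(a, b, t) for a, b in zip(k0, k1)))


# One world-space motion, two authorings: a 2-unit stick swinging a quarter
# turn about its end at the world origin. Each entry holds the snapshot keys,
# the object-space pivot end, and the object-space tip.
setups = {
    "origin at pivot": ([(0.0, 0.0, 0.0), (math.pi / 2, 0.0, 0.0)],
                        (0.0, 0.0), (2.0, 0.0)),
    "origin at center": ([(0.0, 1.0, 0.0), (math.pi / 2, 0.0, 1.0)],
                         (-1.0, 0.0), (1.0, 0.0)),
}

for name, (keys, pivot, tip) in setups.items():
    for method in (direct, decomposed):
        m = method(*keys, 0.5)
        px, py = apply(m, pivot)
        tx, ty = apply(m, tip)
        print(f"{name:>16} / {method.__name__:<10} pivot ({px:+.3f}, {py:+.3f})"
              f"  tip ({tx:+.3f}, {ty:+.3f})")
```

Direct interpolation should print the same pivot and tip positions for both authorings. With decomposed interpolation, the pivot-origin version keeps the pivot fixed and swings the tip along its arc, while the center-origin version lets the supposedly fixed pivot drift to roughly (-0.207, -0.207).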

On the other hand, decomposed interpolation preserves object scale. And although not all objects are rigid bodies, a large majority of the objects animated via transforms are. So scale is a useful thing to preserve by default. It's also probably less surprising to non-technical users. And for rapidly spinning objects like wheels or propellers, where the scaling would really become noticeable, decomposed interpolation can significantly reduce the number of motion segments you need—as long as the object origins are well placed.

I think the pros of each approach basically break down like this:

Direct matrix interpolation:

- Preserves relative positions.

- Is easy to reason about, since it's just line segments. Especially when things like shear and/or poorly placed object origins are thrown into the mix, this can be a real boon.

- Matches perfectly with, and composes with, deformation motion blur.

Decomposed transform interpolation:

- Preserves scale.

- Rotating objects will follow circular arcs, which is especially useful for rapidly spinning objects.

- Better matches user intuition in simple cases.

Importantly, since neither approach gives reliably correct results, any production-oriented renderer needs to support multiple motion segments anyway.[6] And if you take that as a given, then both approaches can achieve arbitrarily accurate results by just throwing more motion segments at them.

Wrapping up.

In Psychopath, I decided to go with direct matrix interpolation.[7] To my sensibilities there's something elegant about all motion blur just being about individual points moving along paths in space. I also like having all motion blur treated uniformly, regardless of whether it's from deformations or transformations. And from a production standpoint, I think avoiding erroneous intersection/separation of co-moving geometry is actually a significant benefit.

Lastly, I just really enjoy seemingly brain-dead solutions that actually turn out to be really good.

But the real point I'm trying to make with this post is that both approaches are perfectly good solutions for motion blur in an offline renderer. So let's put to bed this idea that direct matrix interpolation is somehow "wrong". Because at least in this context it's not. It's just making a different set of legitimate tradeoffs.

Footnotes

[1] Something important that I've elided in the main content of this post is that direct matrix interpolation only has the nice properties I discuss if you interpolate the object-to-world matrices. If you interpolate the world-to-object matrices instead you'll get really funky results. Which is fun, but not useful.

The exception is the camera: you actually want to interpolate the world-to-camera matrix, not camera-to-world. Basically, you always want to interpolate the matrix that is transforming towards the view space, so that means object -> world -> camera.

This is also why I don't mention performance as an advantage of direct matrix interpolation: a ray tracer actually needs the matrices in the camera -> world -> object direction for ray generation and ray traversal, so the performance advantage likely isn't substantial since you'll have to do a matrix inversion after the interpolation.
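A 2D Python sketch of this footnote's recommendation (helper names hypothetical): lerp the object-to-world matrices, then invert the result to get the world-to-object matrix that ray traversal would use. For comparison, it also lerps the world-to-object matrices directly, which for a quarter-turn rotation pushes an object point outward rather than along the straight line between its snapshot positions:

```python
import math


def lerp_affine(m0, m1, t):
    """Component-wise lerp of 2x3 affine matrices [[a, b, tx], [c, d, ty]]."""
    return [[a + (b - a) * t for a, b in zip(r0, r1)] for r0, r1 in zip(m0, m1)]


def apply_point(m, p):
    """Transform the 2D point p by the 2x3 affine matrix m."""
    return (m[0][0] * p[0] + m[0][1] * p[1] + m[0][2],
            m[1][0] * p[0] + m[1][1] * p[1] + m[1][2])


def invert_affine(m):
    """Inverse of a 2x3 affine matrix (assumes the linear part is non-singular)."""
    (a, b, tx), (c, d, ty) = m
    det = a * d - b * c
    ia, ib, ic, id_ = d / det, -b / det, -c / det, a / det
    # The inverse translation is -(inverse linear part) applied to (tx, ty).
    return [[ia, ib, -(ia * tx + ib * ty)], [ic, id_, -(ic * tx + id_ * ty)]]


def rigid(angle, tx, ty):
    """Rotation by `angle` radians followed by translation (tx, ty)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, tx], [s, c, ty]]


obj_to_world = [rigid(0.0, 0.0, 0.0), rigid(math.pi / 2, 0.0, 0.0)]
p = (1.0, 0.0)  # a point in object space

# Interpolate object-to-world, then invert for ray traversal.
m = lerp_affine(*obj_to_world, 0.5)
world_to_obj = invert_affine(m)
print(apply_point(m, p))                             # about (0.5, 0.5): on the straight line
print(apply_point(world_to_obj, apply_point(m, p)))  # about (1.0, 0.0): back in object space

# For comparison: lerp the world-to-object matrices instead.
w = lerp_affine(*(invert_affine(k) for k in obj_to_world), 0.5)
print(apply_point(invert_affine(w), p))              # about (1.0, 1.0): pushed outward
```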

[2] The "scale" component of the resulting decomposition isn't actually a simple scale, but rather is what the original paper calls a "stretch", and requires a full 3x3 matrix. You can think of it as the residual transform that's left over after the translation and rotation have been taken out. When interpolating, you do a simple component-wise interpolation of that matrix.

Importantly, if you instead decomposed to a simple XYZ scale representation you would be throwing away parts of your transformation (e.g. shear, which isn't as uncommon as you might think), which is flagrantly incorrect in the general case.
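For reference, a pure-Python sketch of the rotation/stretch split (helper names hypothetical). It uses a basic Newton iteration for the polar decomposition, q ← (q + q⁻ᵀ) / 2 starting from q = m, and then recovers the stretch as s = qᵀm. It assumes a non-singular matrix with positive determinant (otherwise q would contain a reflection), and it leaves out the translation and the rotation interpolation (typically a quaternion slerp):

```python
import math


def mat_mul(a, b):
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(m):
    return [list(row) for row in zip(*m)]


def inverse(m):
    """Inverse of a 3x3 matrix via the adjugate (assumes it is non-singular)."""
    (a, b, c), (d, e, f), (g, h, i) = m
    cof = [[e * i - f * h, -(d * i - f * g), d * h - e * g],
           [-(b * i - c * h), a * i - c * g, -(a * h - b * g)],
           [b * f - c * e, -(a * f - c * d), a * e - b * d]]
    det = a * cof[0][0] + b * cof[0][1] + c * cof[0][2]
    # The inverse is the transposed cofactor matrix divided by the determinant.
    return [[cof[col][row] / det for col in range(3)] for row in range(3)]


def polar_decompose(m, tol=1e-12, max_iter=100):
    """Split a non-singular 3x3 matrix into m = q @ s.

    q is orthogonal (the rotation, assuming det(m) > 0) and s is the symmetric
    "stretch". Uses the Newton iteration q <- (q + inverse(q)^T) / 2.
    """
    q = [row[:] for row in m]
    for _ in range(max_iter):
        q_inv_t = transpose(inverse(q))
        nxt = [[0.5 * (q[r][c] + q_inv_t[r][c]) for c in range(3)] for r in range(3)]
        delta = max(abs(nxt[r][c] - q[r][c]) for r in range(3) for c in range(3))
        q = nxt
        if delta < tol:
            break
    s = mat_mul(transpose(q), m)
    return q, s


def lerp_matrix(m0, m1, t):
    """Component-wise lerp, as used for the stretch part."""
    return [[x + (y - x) * t for x, y in zip(r0, r1)] for r0, r1 in zip(m0, m1)]


# Build a test matrix from a known rotation and a symmetric stretch whose
# off-diagonal terms (a shear-like component) a plain XYZ scale could not hold.
cos30, sin30 = math.cos(math.radians(30)), math.sin(math.radians(30))
rotation = [[cos30, -sin30, 0.0], [sin30, cos30, 0.0], [0.0, 0.0, 1.0]]
stretch = [[2.0, 0.5, 0.0], [0.5, 1.0, 0.0], [0.0, 0.0, 1.0]]
m = mat_mul(rotation, stretch)

q, s = polar_decompose(m)
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Halfway between "no stretch" and s; the rotation would be slerped separately.
half_stretch = lerp_matrix(identity, s, 0.5)
print([[round(x, 6) for x in row] for row in s])
print([[round(x, 6) for x in row] for row in half_stretch])
```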

[3] Given the topic of this post, I fear this could come across as a criticism of the PBRT book, which is not my intention at all. On the contrary, I think it's an extraordinary book, and it taught me much of what I know about physically based rendering. I absolutely recommend it to anyone wanting to get into this field, and I have nothing but respect for both the book and its authors.

[4] The planet appears to have curved motion paths even when blurred with direct matrix interpolation, but this is an illusion. The motion path of each point on the planet is in fact a straight line, but together those lines create apparent curves, in the same way that a family of straight lines can outline a curve (their envelope), as in string art.

[5] If you're a rigger, you're probably well acquainted with the fact that modelers don't always place object origins carefully.

But sometimes there's not even a single consistent place you can put the origin. Imagine, for example, a character wielding a long bo: the bo will be rotating around different points along its length at different times, so there's no single origin you can give it that's consistent with the entire animation.

[6] This footnote exists purely to further emphasize the following point: if your renderer is intended for serious use with animated content, supporting multi-segment motion blur is a must. And this is true regardless of what interpolation approach you use.

[7] I am considering using decomposed interpolation just for the camera, however, because that seems like a case where it's probably a pure win.